    plt.plot(accuracy_total_val, label='Test Accuracy')
    plt.plot(accuracy_total_train, label='Training Accuracy')

Torch nn sequential get layers code

Let's check our training and validation accuracy, plotted above. The code is as simple as the code for plotting the loss:

    plt.plot(test_losses, label='Test loss')
    plt.plot(train_losses, label='Training loss')
    plt.grid()

In each training step we first need to set the gradients to zero with optimizer.zero_grad(). PyTorch adds newly computed gradients to whatever is already stored for each parameter on every backward pass, so without this call the gradients from previous training steps would be accumulated into the current step instead of being replaced, which would prevent our network from learning properly.

After the forward pass and the loss computation are done, we do a backward pass with loss.backward(), which computes the gradients, and then call optimizer.step(), which updates our parameters based on the current gradients.

While training our network we can also test the model to see how it is performing after each epoch. The most crucial step is to call model.eval() before you test your network and model.train() before you resume training. model.eval() switches layers such as dropout and batch normalization to their inference behaviour. It does not stop gradient computation by itself, so the test pass is usually also wrapped in torch.no_grad(). model.train() switches those layers back to training behaviour so the weights can keep being updated properly.

    plt.title("Ground Truth Label: ".format(accuracy_val))

Output:

    Epoch: 0/20 Training loss: 0.7024 Testing loss: 0.2356 Train accuracy: 0.7891 Test accuracy: 0.9298
    Epoch: 5/20 Training loss: 0.0928 Testing loss: 0.0566 Train accuracy: 0.9715 Test accuracy: 0.9815
    Epoch: 10/20 Training loss: 0.0621 Testing loss: 0.0411 Train accuracy: 0.9806 Test accuracy: 0.9866
    Epoch: 15/20 Training loss: 0.0504 Testing loss: 0.0357 Train accuracy: 0.9846 Test accuracy: 0.9878
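The training and testing steps described above can be sketched as a single loop. This is only a minimal illustration, not the article's actual code: the small `nn.Sequential` classifier, the random stand-in data, the learning rate, and the three epochs are all assumptions chosen so the example runs without downloading MNIST.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Assumption: random tensors stand in for MNIST (256 fake 1x28x28 images, 10 classes).
fake_images = torch.randn(256, 1, 28, 28)
fake_labels = torch.randint(0, 10, (256,))
loader = DataLoader(TensorDataset(fake_images, fake_labels), batch_size=64, shuffle=True)

# Hypothetical small classifier; the network used in the article is not shown in this section.
model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 32), nn.ReLU(), nn.Linear(32, 10))
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

train_losses, test_losses = [], []
for epoch in range(3):
    # Training phase: layers such as dropout/batch norm use training behaviour.
    model.train()
    running_loss = 0.0
    for x, y in loader:
        optimizer.zero_grad()          # clear gradients left over from the previous step
        loss = criterion(model(x), y)  # forward pass and loss computation
        loss.backward()                # backward pass: compute gradients
        optimizer.step()               # update parameters from the current gradients
        running_loss += loss.item()
    train_losses.append(running_loss / len(loader))

    # Testing phase: inference behaviour, and no gradient tracking.
    # (The same loader is reused here only to keep the sketch short.)
    model.eval()
    running_loss = 0.0
    with torch.no_grad():
        for x, y in loader:
            running_loss += criterion(model(x), y).item()
    test_losses.append(running_loss / len(loader))

    print(f"Epoch: {epoch}/3 Training loss: {train_losses[-1]:.4f} Testing loss: {test_losses[-1]:.4f}")
```

The two lists collected here have the same role as the `train_losses` and `test_losses` lists that the plotting code reads. In a real run the testing phase would iterate over a separate validation loader.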

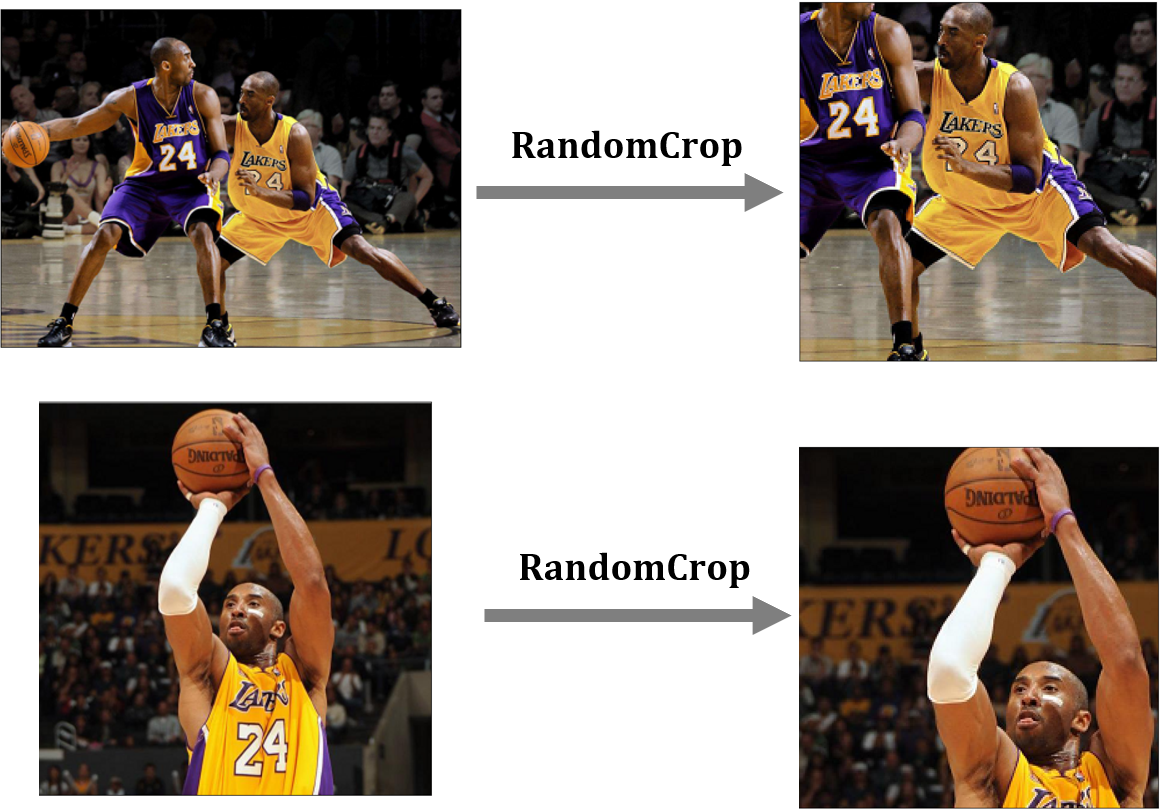

In order to load the MNIST dataset in a handy way, we need DataLoaders for the dataset. We will use a batch_size of 64 for training. TorchVision offers a lot of handy transformations, such as cropping. Here, transforms.ToTensor() converts each image into a torch tensor and divides its pixel values by 255, and transforms.Normalize() then standardizes them using 0.1307 and 0.3081, the global mean and standard deviation of the MNIST dataset.

Now, let's wrap our trainloader object in enumerate, which gives us an iterator from which we can pull a batch of images and labels.

    training_data = enumerate(trainloader)
    batch_idx, (images, labels) = next(training_data)
    print(type(images))   # Checking the datatype
    print(images.shape)   # the size of the image batch
    print(labels.shape)   # the size of the labels

We can see the shape as \(64 \times 1 \times 28 \times 28\): 64 images per batch, 1 channel, and 28 by 28 pixels per image, which is the shape we need in order to visualize them. By visualizing them, we can see that our images contain nicely drawn numbers.
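The scaling and normalization arithmetic, and the resulting batch shape, can be checked without downloading anything. The sketch below is an illustration under stated assumptions: random 8-bit tensors stand in for MNIST images, and the two transforms are reproduced by hand on pixel values rather than through TorchVision.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Assumption: random 8-bit grayscale images (values 0..255) stand in for MNIST.
raw = torch.randint(0, 256, (128, 1, 28, 28), dtype=torch.uint8)
fake_labels = torch.randint(0, 10, (128,))

# Pixel scaling as described for ToTensor: [0, 255] -> [0, 1].
scaled = raw.float() / 255.0

# Normalize with mean 0.1307 and std 0.3081: (x - mean) / std.
normalized = (scaled - 0.1307) / 0.3081

loader = DataLoader(TensorDataset(normalized, fake_labels), batch_size=64, shuffle=True)

# enumerate() yields (batch index, batch); next() pulls the first batch.
batch_idx, (images, labels) = next(enumerate(loader))
print(type(images))   # datatype of the batch
print(images.shape)   # batch size, channels, height, width
print(labels.shape)   # one label per image
```

With these constants a fully black pixel (0) maps to about -0.42 and a fully white pixel (255) to about 2.82, so the normalized values are no longer confined to [0, 1].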

Load MNIST Dataset from TorchVision

    import torch
    from torchvision import datasets, transforms

    # Define transform to normalize data
    transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])
    train_set = datasets.MNIST('DATA_MNIST/', download=True, train=True, transform=transform)
    trainloader = torch.utils.data.DataLoader(train_set, batch_size=64, shuffle=True)
    validation_set = datasets.MNIST('DATA_MNIST/', download=True, train=False, transform=transform)
    validationloader = torch.utils.data.DataLoader(validation_set, batch_size=64, shuffle=True)

Once this cell is executed, our dataset is downloaded and stored in the variables train_set and validation_set.
